Let’s be honest. The quantum computing buzz can feel abstract, a distant sci-fi problem. But here’s the deal: the cryptographic bedrock of our digital world, the RSA and ECC algorithms that secure everything from your HTTPS sessions to your encrypted backups, faces an existential threat. A large enough quantum computer running Shor’s algorithm could break both. Post-quantum cryptography (PQC) is the answer, and implementing it is shifting from a theoretical exercise to a practical, near-future necessity for developers.

This guide isn’t about the deep math (thankfully). It’s about the hands-on, gritty process of getting PQC into your projects. Think of it like retrofitting a historic building for a new kind of earthquake. The structure needs to stand, but the reinforcements have to be woven in carefully.

Why “Harvest Now, Decrypt Later” Changes Everything

First, a crucial mental shift. The biggest immediate risk isn’t a quantum computer appearing tomorrow. It’s the “harvest now, decrypt later” attack. Adversaries are already collecting and storing encrypted data today, betting they can crack it open with a quantum machine in 5, 10, or 15 years. That means data with a long shelf-life—medical records, state secrets, intellectual property—is already vulnerable.

So, waiting for a quantum computer to be announced is like waiting for the storm to hit before you buy lumber for shutters. The time for implementation planning is, well, now.
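One common way to make this concrete is Mosca’s inequality, a planning heuristic from quantum-risk work rather than anything this guide derives. If x (how many years data must stay confidential) plus y (how many years your migration will take) exceeds z (years until a cryptographically relevant quantum computer exists), then data you encrypt today is already exposed. The Python sketch below applies it to a hypothetical inventory; `DataAsset`, `prioritize`, the asset names, and the 5- and 15-year horizons are all illustrative assumptions, not forecasts.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    name: str
    shelf_life_years: float  # x: how long this data must stay confidential


def prioritize(assets: list[DataAsset], migration_years: float,
               years_to_quantum: float) -> list[tuple[str, bool]]:
    """Rank assets by shelf life and flag those where x + y > z (Mosca's inequality)."""
    ranked = sorted(assets, key=lambda a: a.shelf_life_years, reverse=True)
    return [(a.name, a.shelf_life_years + migration_years > years_to_quantum)
            for a in ranked]


# Hypothetical inventory and planning horizons; substitute your own estimates.
inventory = [
    DataAsset("session tokens", 0.01),
    DataAsset("patient records", 30),
    DataAsset("product roadmap", 3),
]
for name, at_risk in prioritize(inventory, migration_years=5, years_to_quantum=15):
    print(f"{name:<16} {'PRIORITY' if at_risk else 'later'}")
```

Because nobody knows z, it is worth rerunning with several values; long-lived data like the patient records here stays flagged even under fairly optimistic timelines.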

The NIST Standardization Marathon: Your New Toolkit

After a lengthy competition, the U.S. National Institute of Standards and Technology (NIST) selected the first batch of PQC algorithms and published the first finalized standards in August 2024. This is your new toolkit. The landscape breaks down into two main categories, which together replace today’s public-key cryptography:

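- CRYSTALS-Kyber (Key Establishment): Your new go-to for key exchange, standardized as ML-KEM in FIPS 203. It’s a structured-lattice key encapsulation mechanism (KEM) and the primary algorithm NIST selected for general encryption: it establishes a shared secret that then keys a fast symmetric cipher such as AES. Think of it as the quantum-resistant successor to Diffie-Hellman or RSA for key agreement.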

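- CRYSTALS-Dilithium, FALCON, SPHINCS+ (Digital Signatures): These replace algorithms like ECDSA and RSA signatures. Dilithium (standardized as ML-DSA in FIPS 204) is the main recommended signature scheme, with FALCON (slated to become FN-DSA) for smaller signatures and SPHINCS+ (standardized as SLH-DSA in FIPS 205) as a stateless hash-based fallback. SPHINCS+ rests on conservative assumptions, since its security depends only on hash functions, but its signatures are much bigger and its signing is slower.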

Honestly, for most developers starting out, focusing on Kyber for key exchange and Dilithium for signatures is a solid, future-proof bet.

The Implementation Playbook: A Phased Approach

Diving headfirst into a full PQC swap is a recipe for breakage. Here’s a more sensible, phased playbook.

Phase 1: Discovery & Crypto-Agility Audit

Before writing a line of new code, map your cryptographic dependencies. Where does your app use TLS? Where are digital signatures verified (JWTs, code signing)? What libraries handle encryption? Use automated tools and manual review to create an inventory.
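For the automated half of that inventory, even a crude scanner helps. This Python sketch walks a source tree and flags lines that mention classical public-key algorithms. The regex patterns, file extensions, and the suggested ML-KEM (standardized Kyber) and ML-DSA (standardized Dilithium) replacements are hypothetical starting points, not a complete detector; expect false positives and misses, and pair it with dependency manifests and manual review.

```python
import os
import re
from collections import Counter

# Hypothetical starter patterns for classical public-key crypto. Deliberately
# loose: tune them for your codebase and expect some false positives.
PATTERNS = {
    "RSA": re.compile(r"\b(RSA|RS256|RS384|RS512|PS256)\b", re.IGNORECASE),
    "ECDSA/ECDH": re.compile(r"\b(ECDSA|ECDH|ES256|ES384|secp256r1|prime256v1|P-256)\b", re.IGNORECASE),
    "EdDSA/X25519": re.compile(r"\b(Ed25519|EdDSA|X25519)\b", re.IGNORECASE),
    "Diffie-Hellman": re.compile(r"\b(DHE|Diffie-?Hellman)\b", re.IGNORECASE),
}

# Rough PQC direction per family, following the Kyber/Dilithium advice above.
SUGGESTED = {
    "RSA": "ML-KEM (key exchange) or ML-DSA (signatures)",
    "ECDSA/ECDH": "hybrid ML-KEM (key exchange) or ML-DSA (signatures)",
    "EdDSA/X25519": "hybrid X25519 + ML-KEM (key exchange) or ML-DSA (signatures)",
    "Diffie-Hellman": "hybrid ML-KEM key exchange",
}

SOURCE_EXTENSIONS = (".py", ".go", ".java", ".js", ".ts", ".conf", ".yaml", ".yml")


def scan(root: str) -> list[tuple[str, int, str]]:
    """Return (path, line number, algorithm family) for every match under root."""
    findings = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            if not name.endswith(SOURCE_EXTENSIONS):
                continue  # skip files we don't know how to read as config/source
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="ignore") as fh:
                for lineno, line in enumerate(fh, start=1):
                    for family, pattern in PATTERNS.items():
                        if pattern.search(line):
                            findings.append((path, lineno, family))
    return findings


def report(findings: list[tuple[str, int, str]]) -> list[str]:
    """Format findings as 'path:line: family -> suggestion' lines."""
    return [f"{path}:{lineno}: {family} -> consider {SUGGESTED[family]}"
            for path, lineno, family in findings]


def summarize(findings: list[tuple[str, int, str]]) -> dict[str, int]:
    """Count findings per algorithm family."""
    return dict(Counter(family for _path, _lineno, family in findings))
```

Running `report(scan("path/to/repo"))` yields one line per hit, which makes a reasonable first draft of the inventory spreadsheet.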

The goal here is to assess your “crypto-agility”: your system’s ability to swap out cryptographic algorithms without a full rebuild. If your crypto is hardcoded in a dozen places with no abstraction, you’ve got work to do. Think of it as untangling a knotted necklace before you can replace the pendant.

Phase 2: The Hybrid Bridge

This is the most critical practical step in a PQC migration today. You don’t turn off the old algorithms yet. Instead, you run them alongside the new PQC ones.

For example, in a TLS handshake, a hybrid mode might combine an X25519 (classic) key share with a Kyber (PQC) key share. Both shared secrets feed into the session key derivation, so an attacker has to break both to recover the key. If Kyber is later found to have a flaw, X25519 still protects the session; if a quantum computer eventually breaks X25519, Kyber still does. It’s a safety belt. Major libraries like OpenSSL already support these hybrid modes, which makes this phase more accessible than you’d think.
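To see why an attacker must break both halves, here is a minimal Python sketch of a concatenate-then-KDF combiner, assuming both exchanges have already produced 32-byte shared secrets (the size X25519 and ML-KEM, the standardized Kyber, both output). It implements HKDF (RFC 5869) with the standard library; `combine_hybrid_secrets` and the `hybrid-session-key` label are hypothetical names. Hybrid TLS groups work on the same principle, concatenating the two secrets, but feed the result into the TLS 1.3 key schedule rather than a standalone function like this one.

```python
import hashlib
import hmac
import secrets

HASH_LEN = hashlib.sha256().digest_size  # 32 bytes


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869) with SHA-256."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """HKDF-Expand (RFC 5869) with SHA-256."""
    if length > 255 * HASH_LEN:
        raise ValueError("HKDF-Expand output too long")
    output, block, counter = b"", b"", 1
    while len(output) < length:
        # T(i) = HMAC(PRK, T(i-1) || info || i)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def combine_hybrid_secrets(pq_secret: bytes, classical_secret: bytes, context: bytes) -> bytes:
    """Derive one 32-byte session key that depends on BOTH shared secrets.

    Both inputs are fixed-length (32 bytes each), so plain concatenation
    is unambiguous.
    """
    if len(pq_secret) != 32 or len(classical_secret) != 32:
        raise ValueError("expected two 32-byte shared secrets")
    prk = hkdf_extract(salt=bytes(HASH_LEN), ikm=pq_secret + classical_secret)
    return hkdf_expand(prk, info=b"hybrid-session-key" + context, length=32)


# Stand-ins: in a real handshake these come from ML-KEM decapsulation and an
# X25519 exchange; the standard library implements neither.
pq_shared = secrets.token_bytes(32)
x25519_shared = secrets.token_bytes(32)
session_key = combine_hybrid_secrets(pq_shared, x25519_shared, context=b"transcript-hash")
```

Changing either input changes the derived key completely, so learning only one of the two secrets tells an attacker nothing useful about `session_key`.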

Phase 3: Library Integration & The Testing Grind

You’re not writing these algorithms from scratch. Please, don’t. Use established, vetted libraries. The liboqs project from Open Quantum Safe is a great starting point, providing C implementations with bindings for Python, Go, and more. The standardized algorithms are also landing natively in core crypto libraries; OpenSSL 3.5, for instance, includes ML-KEM, ML-DSA, and SLH-DSA.

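Testing is where the real work happens. PQC algorithms have very different size and speed profiles from RSA and ECC:

| Algorithm | Public Key Size (vs. RSA-2048’s 256-byte modulus) | Signature Size | Primary Consideration |
|---|---|---|---|
| CRYSTALS-Kyber (ML-KEM) | Larger: 800–1,568 bytes | N/A (ciphertext: 768–1,568 bytes) | Fast, but bigger payloads |
| CRYSTALS-Dilithium (ML-DSA) | Larger: 1,312–2,592 bytes | ~2.4–4.6 KB | Balanced performance/size |
| SPHINCS+ (SLH-DSA) | Small: 32–64 bytes | ~8–50 KB | Huge signatures, slow signing |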
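To put “bigger payloads” in numbers, this small Python calculation compares the key shares of a plain X25519 handshake with a hybrid one, assuming the ML-KEM-768 parameter set of standardized Kyber (1,184-byte encapsulation key, 1,088-byte ciphertext) paired with X25519’s 32-byte public keys. The function names are illustrative, and the figures cover key shares only, not certificates or signatures.

```python
# Published sizes in bytes: X25519 (RFC 7748) and ML-KEM-768 (FIPS 203).
X25519_PUBLIC_KEY = 32
MLKEM768_ENCAPSULATION_KEY = 1184
MLKEM768_CIPHERTEXT = 1088


def key_share_sizes(hybrid: bool) -> tuple[int, int]:
    """Return (client key share, server key share) sizes in bytes."""
    client = X25519_PUBLIC_KEY + (MLKEM768_ENCAPSULATION_KEY if hybrid else 0)
    server = X25519_PUBLIC_KEY + (MLKEM768_CIPHERTEXT if hybrid else 0)
    return client, server


def extra_bytes_per_handshake() -> int:
    """Additional key-share bytes a hybrid handshake sends versus X25519 alone."""
    return sum(key_share_sizes(hybrid=True)) - sum(key_share_sizes(hybrid=False))


for label, hybrid in (("X25519 only", False), ("X25519 + ML-KEM-768", True)):
    client, server = key_share_sizes(hybrid)
    print(f"{label:<22} client {client:>5} B   server {server:>5} B")
print(f"Extra per handshake: {extra_bytes_per_handshake()} bytes")
```

That works out to 2,272 extra bytes per handshake, with the client’s share alone growing from 32 bytes to 1,216.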

You need to test for:

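- Performance: CPU and memory costs shift. Kyber and Dilithium are fast, but SPHINCS+ signing is slow, and bigger keys eat memory on constrained devices.

- Bandwidth: Larger keys and signatures mean bigger messages; a hybrid TLS ClientHello, for instance, may no longer fit in a single network packet.

- Latency: Will an extra 15ms in a handshake break the user experience?

- Storage: Can your database handle storing millions of larger signatures?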
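For the storage question, a back-of-the-envelope estimate is a good first test. This Python sketch multiplies published signature sizes by a record count, assuming you store raw signatures with no database overhead; ML-DSA is standardized Dilithium and SLH-DSA is standardized SPHINCS+. The table entries and function names are illustrative choices, not an exhaustive list.

```python
# Signature sizes in bytes from the published parameter sets. ECDSA is shown
# in its raw 64-byte r||s form; DER-encoded ECDSA signatures run a few bytes longer.
SIGNATURE_BYTES = {
    "Ed25519": 64,
    "ECDSA P-256 (raw)": 64,
    "RSA-2048": 256,
    "ML-DSA-44": 2420,
    "ML-DSA-65": 3309,
    "ML-DSA-87": 4627,
    "SLH-DSA-SHA2-128s": 7856,
    "SLH-DSA-SHA2-128f": 17088,
}


def signature_storage_bytes(algorithm: str, count: int) -> int:
    """Raw bytes needed to store `count` signatures, ignoring DB overhead."""
    return SIGNATURE_BYTES[algorithm] * count


def print_storage_report(count: int) -> None:
    """Print total storage per algorithm, smallest first, relative to Ed25519."""
    baseline = SIGNATURE_BYTES["Ed25519"]
    for name, size in sorted(SIGNATURE_BYTES.items(), key=lambda item: item[1]):
        gib = signature_storage_bytes(name, count) / 2**30
        print(f"{name:<20} {gib:9.2f} GiB  ({size / baseline:6.1f}x Ed25519)")


print_storage_report(10_000_000)
```

At ten million records, ML-DSA-65 alone needs 33,090,000,000 bytes (about 31 GiB) of signatures, roughly 52 times what Ed25519 needs.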

Common Pitfalls & The Human Factor

Sure, the tech is complex. But the human and process hurdles trip up more teams. Watch for these:

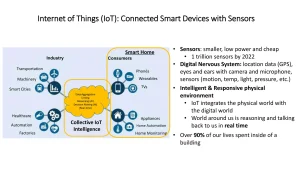

- Legacy System Inertia: That mainframe or embedded IoT device from 2015 might not have the computational headroom. This forces tough decisions about risk and upgrade cycles.

- Protocol Incompatibility: Some older protocols have hardcoded field sizes. A giant PQC public key might just not fit. You might need to update or replace the protocol itself—a bigger lift.

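- The “Set It and Forget It” Myth: PQC is a moving target. NIST has already run a fourth round to pick additional key-establishment algorithms (selecting HQC in 2025 as a backup to Kyber) and is separately evaluating more signature schemes. Implementation isn’t a one-time checkbox; it’s adopting a mindset of continuous cryptographic evolution.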
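The field-size problem is easy to reproduce. This Python sketch models a hypothetical legacy wire format that prefixes each key with a single length byte, capping keys at 255 bytes. That is plenty for a 32-byte X25519 key but not for a 1,184-byte ML-KEM-768 (standardized Kyber) encapsulation key. The format and the `encode_field`/`decode_field` helpers are invented for illustration; for comparison, TLS 1.3 already gives key shares a 2-byte length.

```python
import struct

# struct formats for big-endian length prefixes of 1, 2, or 4 bytes.
PREFIX_FORMATS = {1: ">B", 2: ">H", 4: ">I"}


def encode_field(payload: bytes, prefix_bytes: int) -> bytes:
    """Length-prefix `payload`, refusing anything the prefix cannot describe."""
    max_len = 2 ** (8 * prefix_bytes) - 1
    if len(payload) > max_len:
        raise ValueError(
            f"{len(payload)}-byte payload exceeds the {max_len}-byte limit "
            f"of a {prefix_bytes}-byte length prefix"
        )
    return struct.pack(PREFIX_FORMATS[prefix_bytes], len(payload)) + payload


def decode_field(data: bytes, prefix_bytes: int) -> bytes:
    """Inverse of encode_field; rejects truncated input."""
    (length,) = struct.unpack_from(PREFIX_FORMATS[prefix_bytes], data)
    payload = data[prefix_bytes:prefix_bytes + length]
    if len(payload) != length:
        raise ValueError("truncated field")
    return payload


x25519_key = bytes(32)      # placeholder bytes, sized like an X25519 public key
mlkem768_key = bytes(1184)  # placeholder bytes, sized like an ML-KEM-768 encapsulation key

print(len(encode_field(x25519_key, prefix_bytes=1)))    # 33: fits the legacy format
try:
    encode_field(mlkem768_key, prefix_bytes=1)
except ValueError as err:
    print("legacy format:", err)
print(len(encode_field(mlkem768_key, prefix_bytes=2)))  # 1186: fits a 2-byte prefix
```

Widening the prefix is the easy part; every peer that parses the old format has to be upgraded too, which is what makes this a protocol change rather than a library swap.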

Your Actionable Starting Points

Feeling overwhelmed? Start small and concrete.

- Experiment in a Lab: Spin up a test server using a hybrid TLS mode. Use the Open Quantum Safe project’s oqs-provider, which plugs liboqs into OpenSSL 3, or build against liboqs directly. Break things where it doesn’t matter.

- Prioritize by Data Lifespan: Identify the data in your systems that has the longest sensitivity lifespan. That’s your “crown jewels” data and your PQC implementation priority #1.

- Abstract Your Crypto: If you haven’t, build a thin abstraction layer around your cryptographic operations. This single change will make any future migration, quantum or otherwise, far easier.
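A thin abstraction can be as small as a registry keyed by algorithm identifier, plus a convention of storing that identifier next to every signature so old data stays verifiable after a switch. The Python sketch below uses hypothetical names (`register`, `sign`, `verify`, the `hmac-*` ids). HMAC stands in for real signature schemes only because the standard library ships no public-key signatures; with ML-DSA or ECDSA, `sign` would take a private key and `verify` a public key.

```python
import hashlib
import hmac
from typing import Callable, Dict, Set, Tuple

SignFn = Callable[[bytes, bytes], bytes]          # (key, message) -> signature
VerifyFn = Callable[[bytes, bytes, bytes], bool]  # (key, message, signature) -> ok

_REGISTRY: Dict[str, Tuple[SignFn, VerifyFn]] = {}


def register(alg_id: str, sign_fn: SignFn, verify_fn: VerifyFn) -> None:
    _REGISTRY[alg_id] = (sign_fn, verify_fn)


def sign(alg_id: str, key: bytes, message: bytes) -> bytes:
    """Sign and prefix the result with its algorithm id so it stays verifiable later."""
    sign_fn, _ = _REGISTRY[alg_id]
    header = alg_id.encode("ascii")
    return bytes([len(header)]) + header + sign_fn(key, message)


def verify(key: bytes, message: bytes, blob: bytes, allowed: Set[str]) -> bool:
    """Dispatch on the embedded algorithm id, but only for ids the caller allows."""
    if not blob:
        return False
    n = blob[0]
    alg_id = blob[1:1 + n].decode("ascii", errors="replace")
    if alg_id not in allowed or alg_id not in _REGISTRY:
        return False  # refusing unknown or retired ids blocks downgrade attempts
    _, verify_fn = _REGISTRY[alg_id]
    return verify_fn(key, message, blob[1 + n:])


def _hmac_pair(digest) -> Tuple[SignFn, VerifyFn]:
    # HMAC is a symmetric MAC, NOT a signature; it only stands in so this runs.
    def sign_fn(key: bytes, message: bytes) -> bytes:
        return hmac.new(key, message, digest).digest()

    def verify_fn(key: bytes, message: bytes, tag: bytes) -> bool:
        return hmac.compare_digest(sign_fn(key, message), tag)

    return sign_fn, verify_fn


register("hmac-sha256", *_hmac_pair(hashlib.sha256))
register("hmac-sha3-256", *_hmac_pair(hashlib.sha3_256))
# An ML-DSA entry would wrap your PQC library's sign/verify in the same shape.

CURRENT_ALGORITHM = "hmac-sha3-256"  # migrating means changing this one line
```

The `allowed` set matters as much as the registry: during a migration you accept both old and new ids, then drop the old one once re-signing is complete.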

Look, this transition won’t happen overnight. It’ll be a slow, iterative weave of new algorithms into the vast, tangled fabric of our digital infrastructure. There will be bugs, performance regressions, and headaches.

But the developers who start understanding these tools now, who begin plotting their migration path, won’t just be securing data for a distant future. They’ll be building more agile, resilient, and forward-looking systems for tomorrow. And that, in the end, is just good engineering.
